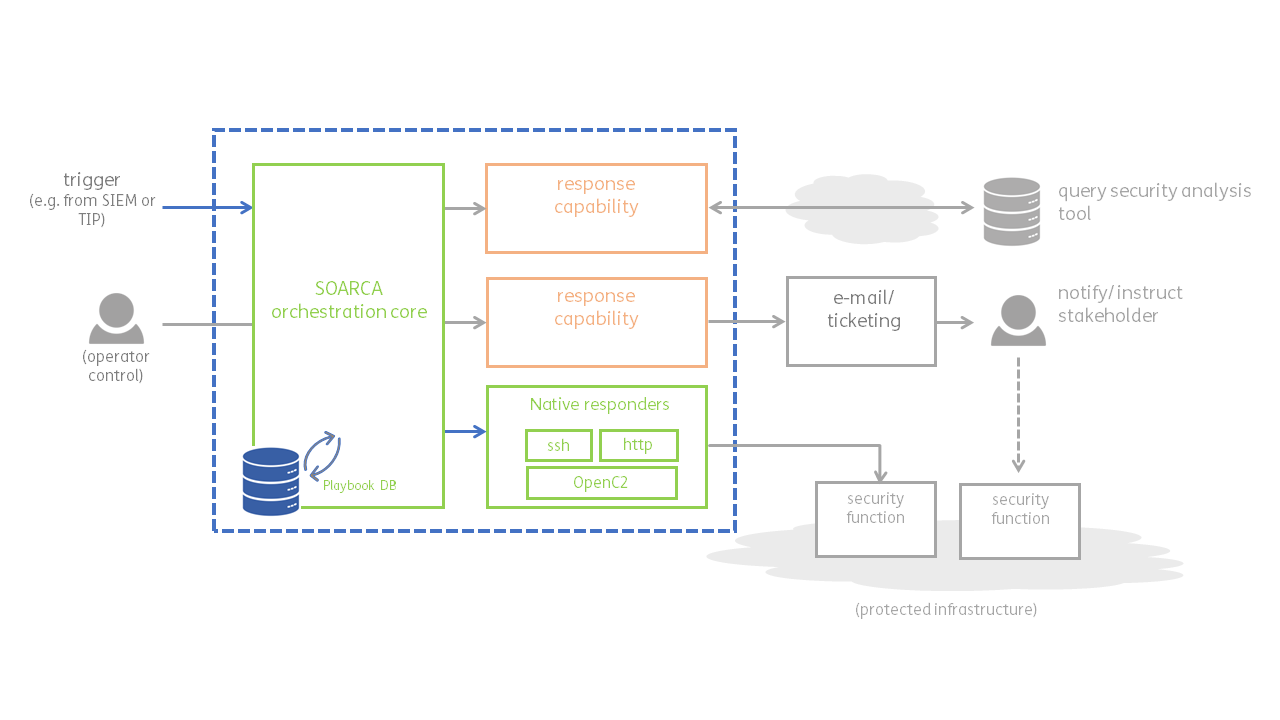

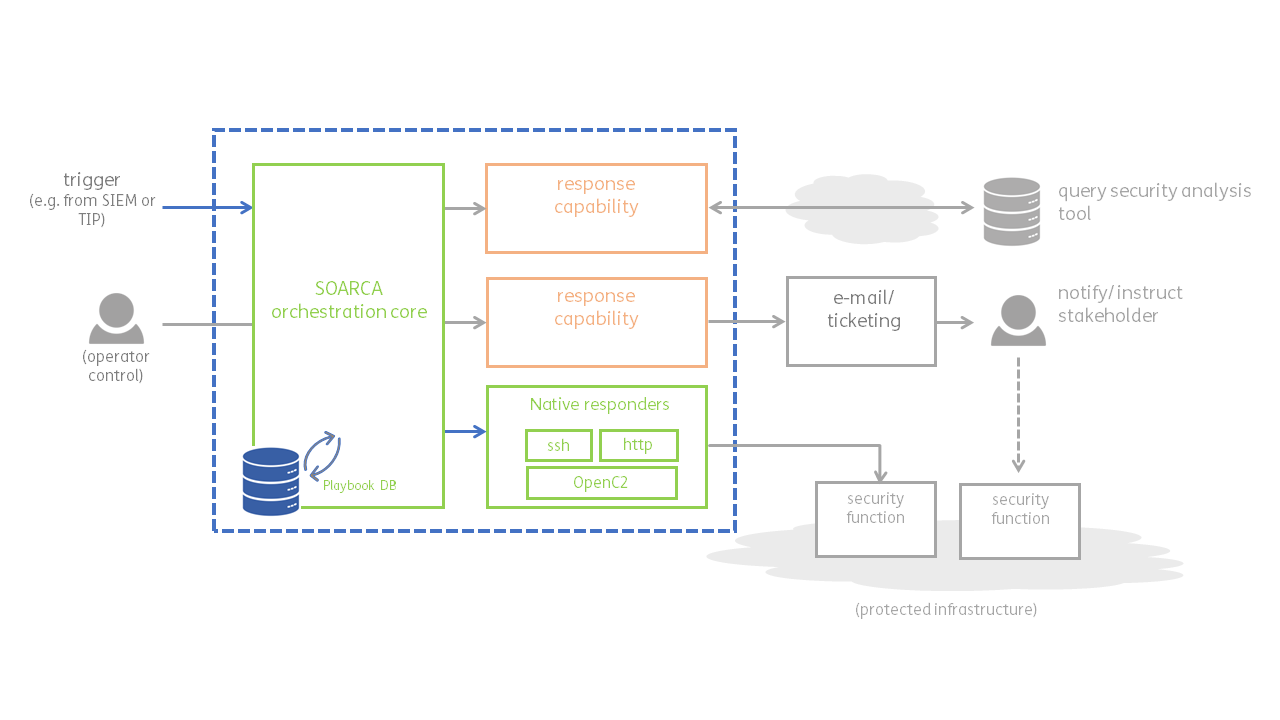

Design - core components

The design of SOARCA

SOARCA consists of several key components:

- SOARCA Core: This is the heart of SOARCA, represented in green.

- SOARCA Native Capabilities: These are the functionalities explicitly defined in the CACAO v2 specification and are integral to the core. They are also represented in green.

- Fins: These are the extension capabilities. They enhance the functionality and integration of SOARCA and are depicted in orange. Fins are not (yet) part of this repository, but may be implemented by partners or TNO in the future.

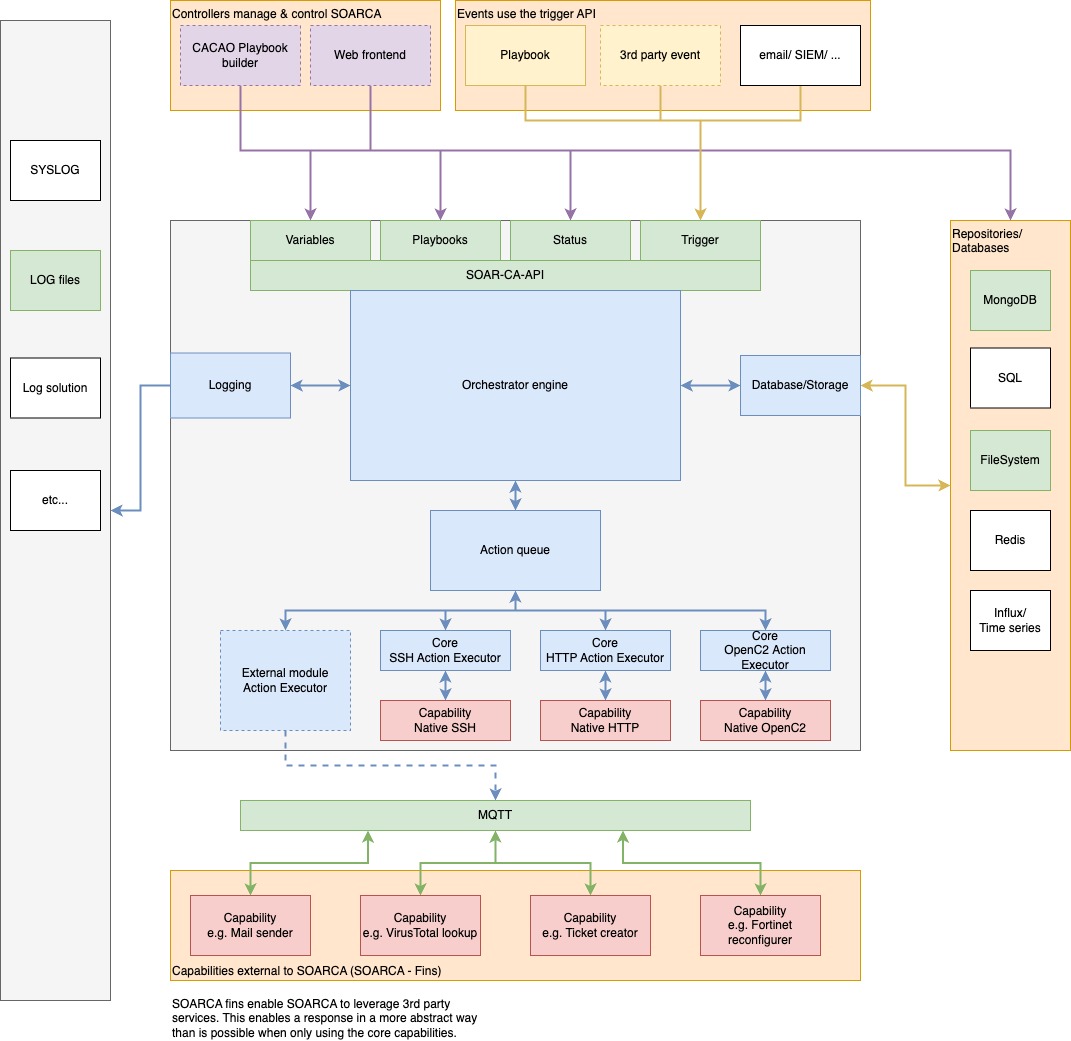

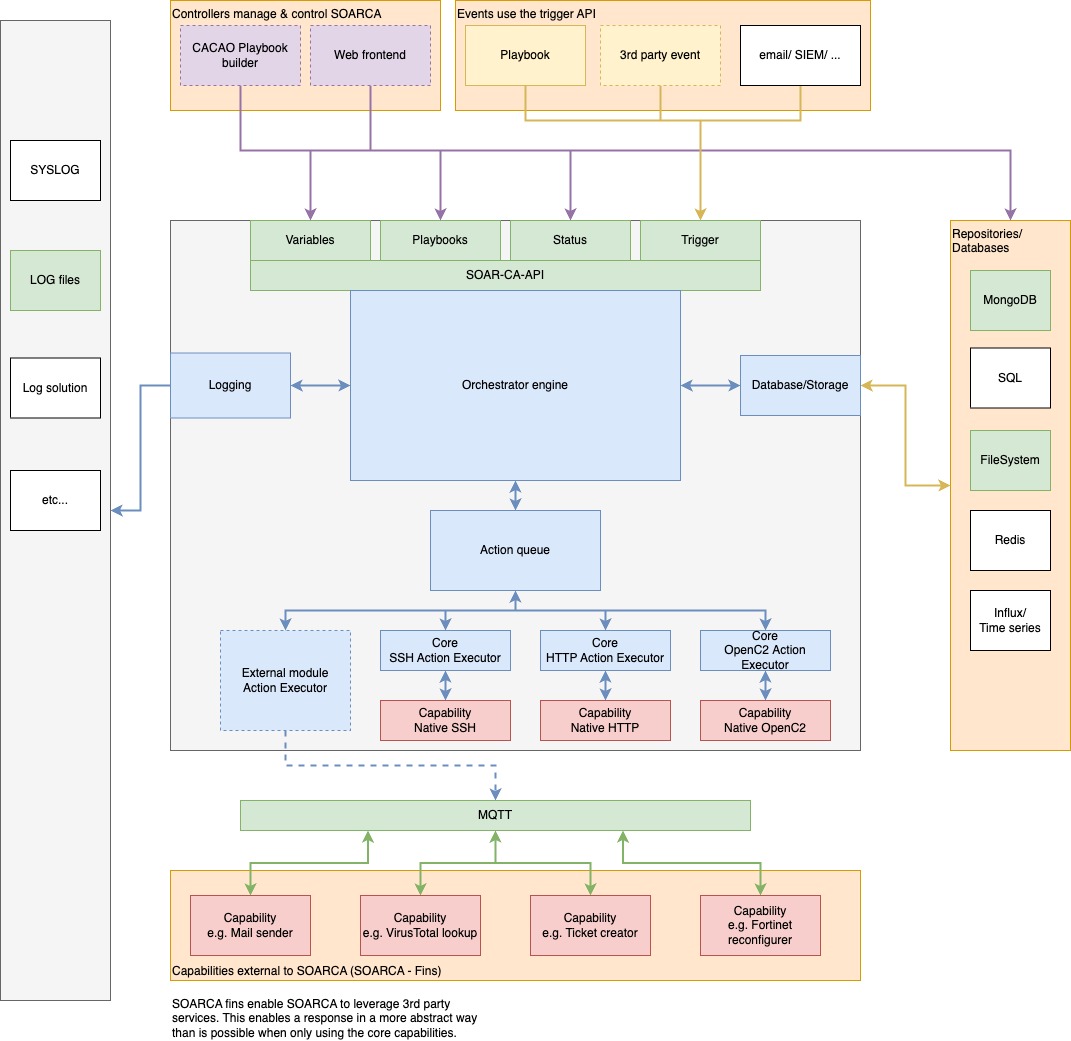

Core component overview

SOARCA interacts with many components. The diagram shows the intended setup for SOARCA and its components. Not all parts are fully implemented yet; keep track of the release notes to see what is implemented and what still needs work.

1 - Application design

Details of the application architecture for SOARCA

Design decisions and core dependencies

To allow for fast execution and type-safe development, SOARCA is developed in Go. The application can be deployed in Docker. Further dependencies are MQTT for the module system and go-gin for the REST API.

This page guides you through the SOARCA architecture and components, as well as the main flow.

Components

Components of SOARCA are displayed in the component diagram.

- Green is implemented

- Orange has limited functionality

- Red is not started but will be added in future releases

@startuml

set separator ::

protocol /playbook #lightgreen

protocol /trigger #lightgreen

protocol /status #lightgreen

protocol /reporter #lightgreen

protocol /manual #lightgreen

protocol /step #red

protocol /trusted/variables #red

class reporter::cache #orange

class core::decomposer #lightgreen

class core::executor #lightgreen

class interaction::interaction #lightgreen

class internal::controller #lightgreen

class internal::database #lightgreen

class internal::logger #lightgreen

class api::playbook #lightgreen

class api::trigger #lightgreen

class core::capability::manual #lightgreen

class core::capability::http #lightgreen

class core::capability::ssh #lightgreen

class core::capability::openC2 #lightgreen

class core::capability::fin #lightgreen

class core::capability::powershell #lightgreen

class api::status #lightgreen

class api::reporter #lightgreen

class api::manual #lightgreen

class api::step #red

class api::variables #red

"/step" *-- api::step

"/playbook" *-- api::playbook

"/trigger" *-- api::trigger

"/status" *-- api::status

"/reporter" *-- api::reporter

"/manual" *-- api::manual

"/trusted/variables" *-- api::variables

api *-down- internal::controller

internal::controller -* internal::database

internal::logger *- internal::controller

internal::controller -down-* core

api::reporter -down-> reporter::cache

reporter::cache <-- core::decomposer

reporter::cache <-- core::executor

api::playbook --> internal::database

api::trigger --> core::decomposer

api::trigger --> internal::database

api::manual --> interaction::interaction

core::decomposer -down-> core::executor

core::executor --> core::capability::openC2

core::executor --> core::capability::fin

core::executor --> core::capability::http

core::executor --> core::capability::ssh

core::executor --> core::capability::powershell

core::executor --> core::capability::manual

interaction::interaction <-- core::capability::manual

@enduml

Classes

This diagram shows the class structure used by SOARCA.

@startuml

interface IPlaybook

interface IStatus

interface ITrigger

Interface IPlaybookDatabase

Interface IDatabase

Interface ICapability

Interface IDecomposer

Interface IExecuter

class Controller

class Decomposer

class PlaybookDatabase

class Status

class Mongo

class Capability

Class Executer

IPlaybook <|.. Playbook

ITrigger <|.. Trigger

IStatus <|.. Status

ICapability <|.. Capability

IExecuter <|.. Executer

Trigger -> IPlaybookDatabase

IPlaybookDatabase <- Playbook

IPlaybookDatabase <|.. PlaybookDatabase

IDatabase <-up- PlaybookDatabase

IDatabase <|.. Mongo

IDecomposer <- Trigger

IDecomposer <|.. Decomposer

IExecuter <- Decomposer

ICapability <- Executer

@enduml

Controller

The SOARCA controller creates all classes needed by SOARCA and glues the API and the decomposer together. Each run instantiates a new decomposer.

@startuml

interface IPlaybook{
void Get()
void Get(PlaybookId id)
void Add(Playbook playbook)
void Update(Playbook playbook)
void Remove(Playbook playbook)
}

interface IStatus{
}

interface ITrigger{
void TriggerById(PlaybookId id)
void Trigger(Playbook playbook)
}

interface IPlaybookDatabase
interface IDecomposer
interface IExecuter

class Trigger
class Controller
class Decomposer

IPlaybook <|.. Playbook
ITrigger <|.. Trigger
IStatus <|.. Status
Trigger -> IPlaybookDatabase
IPlaybookDatabase <- Playbook
IPlaybookDatabase <|.. PlaybookDatabase
IDecomposer <- Trigger
IDecomposer <|.. Decomposer
IExecuter <- Decomposer

@enduml
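The Go sketch below illustrates the controller's role using simplified types. `Playbook`, `Controller`, `NewDecomposer` and `Trigger` are hypothetical names, not SOARCA's actual API. Every trigger obtains a fresh decomposer, so no execution state is shared between runs.

```go
package main

import (
	"errors"
	"fmt"
)

// Playbook is a hypothetical stand-in for a parsed CACAO playbook.
type Playbook struct {
	ID string
}

// ExecutionDetails pairs an execution id with the executed playbook id.
type ExecutionDetails struct {
	ExecutionID string
	PlaybookID  string
}

// IDecomposer is the interface the controller hands playbooks to.
type IDecomposer interface {
	Execute(pb Playbook) (ExecutionDetails, error)
}

// decomposer is a trivial implementation; a real one would walk the workflow.
type decomposer struct {
	executionID string
}

func (d *decomposer) Execute(pb Playbook) (ExecutionDetails, error) {
	if pb.ID == "" {
		return ExecutionDetails{}, errors.New("playbook has no id")
	}
	return ExecutionDetails{ExecutionID: d.executionID, PlaybookID: pb.ID}, nil
}

// Controller glues the API to the core. It creates a new decomposer for
// every run so that no execution state leaks between runs.
type Controller struct {
	runs int
}

func (c *Controller) NewDecomposer() IDecomposer {
	c.runs++
	return &decomposer{executionID: fmt.Sprintf("execution-%d", c.runs)}
}

// Trigger is what an API handler would call for POST /trigger/playbook.
func (c *Controller) Trigger(pb Playbook) (ExecutionDetails, error) {
	return c.NewDecomposer().Execute(pb)
}

func main() {
	c := &Controller{}
	first, _ := c.Trigger(Playbook{ID: "playbook--1"})
	second, _ := c.Trigger(Playbook{ID: "playbook--1"})
	fmt.Println(first.ExecutionID, second.ExecutionID) // execution-1 execution-2
}
```

Creating the decomposer per run keeps each execution isolated. The cost is one small allocation per trigger.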

Main application flow

These sequences show a simplified overview of how the SOARCA components interact.

The main flow of the application is as follows. Execution starts by processing the JSON-formatted CACAO playbook; if this succeeds, the playbook is handed over to the decomposer. The decomposer splits the playbook into its parts and passes them step by step to the executor. These operations block the API until execution is finished. For now, no variables are exposed to the caller via the API.

actor Caller

Caller -> Api

Api -> Trigger : POST /trigger

participant Reporter

Trigger -> Decomposer : Trigger playbook as ad-hoc execution

Trigger <-- Decomposer : execution details

Api <-- Trigger : execution details

Caller <-- Api

loop for each step

Decomposer -> Executor : Send step to executor

Executor -> Executor : select capability (ssh selected)

Executor -> Ssh : Command

Executor <-- Ssh : return

Decomposer <-- Executor

Reporter <-- Decomposer : Step execution results

else execution failure (break loop)

Executor <-- Ssh : error

Decomposer <-- Executor: error

Reporter <-- Decomposer : Step execution results

Decomposer -> Decomposer : stop execution

end

Api -> Reporter : GET /reporter/execution-id

Api <-- Reporter : response data
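The following Go sketch shows the blocking step loop from the sequence above. It assumes a reduced step model in which each step has only an `OnSuccess` successor. `Step`, `Executor`, `Reporter` and `runWorkflow` are hypothetical names. The sketch reports every executed step and stops at the first failure, mirroring the failure branch of the diagram.

```go
package main

import (
	"errors"
	"fmt"
)

// Step is a reduced, hypothetical CACAO step with a single successor.
type Step struct {
	ID        string
	Command   string
	OnSuccess string // id of the next step; empty means the workflow ends
}

// Executor runs one step, for example through the ssh capability.
type Executor interface {
	Execute(step Step) error
}

// Reporter receives the result of every executed step.
type Reporter interface {
	ReportStep(stepID string, err error)
}

// runWorkflow executes the workflow step by step and blocks until it ends.
// It stops at the first failing step, after reporting that step's result.
func runWorkflow(workflow map[string]Step, start string, exe Executor, rep Reporter) error {
	visited := make(map[string]bool)
	for id := start; id != ""; {
		if visited[id] {
			return fmt.Errorf("step %q reached twice: cycle in workflow", id)
		}
		visited[id] = true
		step, ok := workflow[id]
		if !ok {
			return fmt.Errorf("step %q not found in workflow", id)
		}
		err := exe.Execute(step)
		rep.ReportStep(step.ID, err)
		if err != nil {
			return fmt.Errorf("execution stopped at %s: %w", step.ID, err)
		}
		id = step.OnSuccess
	}
	return nil
}

// fakeSSH fails on one specific command to exercise the error branch.
type fakeSSH struct{ failOn string }

func (f fakeSSH) Execute(s Step) error {
	if s.Command == f.failOn {
		return errors.New("ssh: command failed")
	}
	return nil
}

// logReporter records the order in which steps were reported.
type logReporter struct{ seen []string }

func (l *logReporter) ReportStep(id string, _ error) { l.seen = append(l.seen, id) }

func main() {
	wf := map[string]Step{
		"step--1": {ID: "step--1", Command: "uname -a", OnSuccess: "step--2"},
		"step--2": {ID: "step--2", Command: "exit 1", OnSuccess: "step--3"},
		"step--3": {ID: "step--3", Command: "echo done"},
	}
	rep := &logReporter{}
	err := runWorkflow(wf, "step--1", fakeSSH{failOn: "exit 1"}, rep)
	fmt.Println(err)      // execution stopped at step--2: ssh: command failed
	fmt.Println(rep.seen) // [step--1 step--2]
}
```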

2 - API Description

Descriptions for the SOARCA REST API endpoints

Endpoint description

We use standard HTTP status codes, see https://en.wikipedia.org/wiki/List_of_HTTP_status_codes

@startuml

protocol UiEndpoint {

GET /playbook

GET /playbook/meta

POST /playbook

GET /playbook/playbook-id

PUT /playbook/playbook-id

DELETE /playbook/playbook-id

POST /trigger/playbook

POST /trigger/playbook/id

GET /step

GET /status

GET /status/playbook

GET /status/playbook/id

GET /status/history

}

@enduml

General messages

Error

When an error occurs, a 400 status is returned with the following JSON payload. The original call can be omitted in production for security reasons.

responses: 400/Bad request

@startjson

{

"status": "400",

"message": "What went wrong.",

"original-call": "<optional> Request JSON data",

"downstream-call" : "<optional> downstream call JSON"

}

@endjson
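The general error payload can be modelled in Go as follows, assuming the optional fields are left empty in production. The `ErrorResponse` type and the `includeCalls` switch are hypothetical. `omitempty` drops the optional keys from the encoded JSON.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ErrorResponse mirrors the general error payload.
type ErrorResponse struct {
	Status         string `json:"status"`
	Message        string `json:"message"`
	OriginalCall   string `json:"original-call,omitempty"`
	DownstreamCall string `json:"downstream-call,omitempty"`
}

// newErrorResponse builds the payload; includeCalls is a hypothetical switch
// that would be enabled only in development mode.
func newErrorResponse(msg, original, downstream string, includeCalls bool) ErrorResponse {
	resp := ErrorResponse{Status: "400", Message: msg}
	if includeCalls {
		resp.OriginalCall = original
		resp.DownstreamCall = downstream
	}
	return resp
}

func main() {
	prod, _ := json.Marshal(newErrorResponse("invalid playbook", `{"type":"playbook"}`, "", false))
	fmt.Println(string(prod)) // {"status":"400","message":"invalid playbook"}
}
```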

Unauthorized

When the caller does not have valid authentication, 401/Unauthorized is returned.

cacao playbook JSON

@startjson

{

"type": "playbook",

"spec_version": "cacao-2.0",

"id": "playbook--91220064-3c6f-4b58-99e9-196e64f9bde7",

"name": "coa flow",

"description": "This playbook will trigger a specific coa",

"playbook_types": ["notification"],

"created_by": "identity--06d8f218-f4e9-4f9f-9108-501de03d419f",

"created": "2020-03-04T15:56:00.123456Z",

"modified": "2020-03-04T15:56:00.123456Z",

"revoked": false,

"valid_from": "2020-03-04T15:56:00.123456Z",

"valid_until": "2020-07-31T23:59:59.999999Z",

"derived_from": [],

"priority": 1,

"severity": 1,

"impact": 1,

"industry_sectors": ["information-communications-technology", "research", "non-profit"],

"labels": ["soarca"],

"external_references": [

{

"name": "TNO SOARCA",

"description": "SOARCA Homepage",

"source": "TNO - COSSAS - HxxPS://LINK-TO-CODE-REPO.TLD",

"url": "HxxPS://LINK-TO-CODE-REPO.TLD",

"hash": "00000000000000000000000000000000000000000000000000000000000",

"external_id": "TNO/SOARCA 2023.01"

}

],

"features": {

"if_logic": true,

"data_markings": false

},

"markings": [],

"playbook_variables": {

"$$flow_data_location$$": {

"type": "string",

"value": "<mongodb_location>",

"description": "location of event and flow data",

"constant": true

},

"$$event_type$$": {

"type" : "string",

"value": "<event_type_string>",

"description": "type of incoming event / trigger",

"constant": true

}

},

"workflow_start": "step--d737c35f-595e-4abf-83ef-d0b6793556b9",

"workflow_exception": "step--40131926-89e9-44df-a018-5f92f2df7914",

"workflow": {

"step--5ea28f63-ac32-4e5e-bd0c-757a50a3a0d7":{

"type": "single",

"name": "BI for CoAs",

"delay": 0,

"timeout": 30000,

"command": {

"type": "http-api",

"command": "hxxps://our.bi/key=VALUE"

},

"on_success": "step--71b15428-275a-49b5-9f09-3944972a0054",

"on_failure": "step--71b15428-275a-49b5-9f09-3944972a0054"

},

"step--71b15428-275a-49b5-9f09-3944972a0054": {

"type": "end",

"name": "End Playbook SOARCA Main Flow"

}

},

"targets": {

},

"extension_definitions": { }

}

@endjson
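The following sketch decodes the fields of this example that the core needs, using only `encoding/json`. The `Playbook`, `Step` and `Command` types are hypothetical and cover a small subset of CACAO 2.0. The check that `on_success` and `on_failure` point to existing steps is an illustrative validation, not a statement of what SOARCA validates.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Command and Step cover only the fields used in the example playbook.
type Command struct {
	Type    string `json:"type"`
	Command string `json:"command"`
}

type Step struct {
	Type      string   `json:"type"`
	Name      string   `json:"name"`
	Delay     int      `json:"delay,omitempty"`
	Timeout   int      `json:"timeout,omitempty"`
	Command   *Command `json:"command,omitempty"`
	OnSuccess string   `json:"on_success,omitempty"`
	OnFailure string   `json:"on_failure,omitempty"`
}

// Playbook is a partial view of a CACAO 2.0 playbook; other fields are ignored.
type Playbook struct {
	Type          string          `json:"type"`
	SpecVersion   string          `json:"spec_version"`
	ID            string          `json:"id"`
	Name          string          `json:"name"`
	WorkflowStart string          `json:"workflow_start"`
	Workflow      map[string]Step `json:"workflow"`
}

// parsePlaybook decodes the JSON and performs a few sanity checks.
func parsePlaybook(data []byte) (Playbook, error) {
	var pb Playbook
	if err := json.Unmarshal(data, &pb); err != nil {
		return Playbook{}, err
	}
	if pb.Type != "playbook" || pb.SpecVersion != "cacao-2.0" {
		return Playbook{}, fmt.Errorf("not a CACAO 2.0 playbook: type=%q spec_version=%q", pb.Type, pb.SpecVersion)
	}
	// Every on_success / on_failure reference must point to a step in the workflow.
	for id, step := range pb.Workflow {
		for _, next := range []string{step.OnSuccess, step.OnFailure} {
			if next == "" {
				continue
			}
			if _, ok := pb.Workflow[next]; !ok {
				return Playbook{}, fmt.Errorf("step %s references unknown step %s", id, next)
			}
		}
	}
	return pb, nil
}

func main() {
	data := []byte(`{"type": "playbook", "spec_version": "cacao-2.0",
		"id": "playbook--91220064-3c6f-4b58-99e9-196e64f9bde7",
		"workflow": {"step--71b15428-275a-49b5-9f09-3944972a0054": {"type": "end", "name": "End Playbook SOARCA Main Flow"}}}`)
	pb, err := parsePlaybook(data)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(pb.ID, len(pb.Workflow)) // playbook--91220064-3c6f-4b58-99e9-196e64f9bde7 1
}
```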

/playbook

The playbook endpoints are used to manage playbooks in SOARCA: new playbooks can be added, and existing ones edited and deleted.

GET /playbook

Get all playbooks that are currently stored in SOARCA.

Call payload

None

Response

200/OK with payload:

@startjson

[

{

"type": "playbook",

"etc" : "etc..."

}

]

@endjson

Error

400/BAD REQUEST with payload:

General error

GET /playbook/meta

Get the metadata of all playbooks that are currently stored in SOARCA.

Call payload

None

Response

200/OK with payload:

@startjson

[

{

"id": "<playbook id>",

"name": "<playbook name>",

"description": "<playbook description>",

"created": "<creation date time>",

"valid_from": "<valid from date time>",

"valid_until": "<valid until date time>",

"labels": ["label 1","label 2"]

}

]

@endjson

Error

400/BAD REQUEST with payload:

General error

POST /playbook

Create a new playbook and store it in SOARCA. The payload is a playbook in the cacao playbook JSON format.

Payload

@startjson

{

"type": "playbook",

"etc" : "etc..."

}

@endjson

Response

201/CREATED

@startjson

{

"type": "playbook",

"etc" : "etc..."

}

@endjson

Error

400/BAD REQUEST with the general error payload, or 409/CONFLICT if the entry already exists.
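The following minimal in-memory sketch shows how store errors could map onto the status codes listed for the playbook endpoints. It returns 201 on create, 409 on a duplicate id, and 400 when deleting a missing playbook. `memoryStore` and `statusFor` are hypothetical stand-ins for the MongoDB-backed playbook database, and the store is not safe for concurrent use.

```go
package main

import (
	"errors"
	"fmt"
	"net/http"
)

var (
	ErrConflict = errors.New("playbook already exists")
	ErrNotFound = errors.New("playbook not found")
)

// memoryStore is a hypothetical in-memory stand-in for the playbook database.
type memoryStore struct {
	playbooks map[string][]byte // playbook id -> raw CACAO JSON
}

func newMemoryStore() *memoryStore {
	return &memoryStore{playbooks: make(map[string][]byte)}
}

// Add stores a playbook and refuses duplicates.
func (s *memoryStore) Add(id string, raw []byte) error {
	if _, exists := s.playbooks[id]; exists {
		return ErrConflict
	}
	s.playbooks[id] = raw
	return nil
}

// Remove deletes a playbook and reports when it does not exist.
func (s *memoryStore) Remove(id string) error {
	if _, exists := s.playbooks[id]; !exists {
		return ErrNotFound
	}
	delete(s.playbooks, id)
	return nil
}

// statusFor maps store errors to the status codes documented for /playbook.
func statusFor(err error, success int) int {
	switch {
	case err == nil:
		return success
	case errors.Is(err, ErrConflict):
		return http.StatusConflict
	default:
		return http.StatusBadRequest
	}
}

func main() {
	s := newMemoryStore()
	fmt.Println(statusFor(s.Add("playbook--1", []byte(`{}`)), http.StatusCreated)) // 201
	fmt.Println(statusFor(s.Add("playbook--1", []byte(`{}`)), http.StatusCreated)) // 409
	fmt.Println(statusFor(s.Remove("playbook--2"), http.StatusOK))                 // 400
}
```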

GET /playbook/{playbook-id}

Get playbook details

Call payload

None

Response

200/OK with payload:

@startjson

{

"<cacao-playbook> (json)"

}

@endjson

Error

400/BAD REQUEST

PUT /playbook/{playbook-id}

An existing playbook can be updated with PUT.

Call payload

A playbook in the cacao playbook JSON format

Response

200/OK with the updated playbook in the cacao playbook JSON format

Error

400/BAD REQUEST for malformed request

When updated it will return 200/OK or General error in case of an error.

DELETE /playbook/{playbook-id}

An existing playbook can be deleted with DELETE. When removed it will return 200/OK or general error in case of an error.

Call payload

None

Response

200/OK if deleted

Error

400/BAD REQUEST if the resource does not exist

POST /trigger/playbook/xxxxxxxx-xxxx-Mxxx-Nxxx-xxxxxxxxxxxx

Execute playbook with a specific id

Call payload

None

Response

Returns 200/OK when the playbook has finished executing.

@startjson

{

"execution-id": "xxxxxxxx-xxxx-Mxxx-Nxxx-xxxxxxxxxxxx",

"playbook-id": "xxxxxxxx-xxxx-Mxxx-Nxxx-xxxxxxxxxxxx"

}

@endjson

Error

400/BAD REQUEST with the general error payload.

POST /trigger/playbook

Execute an ad-hoc playbook

Call payload

A playbook in the cacao playbook JSON format

Response

Returns 200/OK when the playbook has finished executing.

@startjson

{

"execution-id": "xxxxxxxx-xxxx-Mxxx-Nxxx-xxxxxxxxxxxx",

"playbook-id": "xxxxxxxx-xxxx-Mxxx-Nxxx-xxxxxxxxxxxx"

}

@endjson

Error

400/BAD REQUEST with the general error payload.

/step [NOT in SOARCA V1.0]

Get the steps that SOARCA is capable of executing, so that a CoA builder can generate or build valid CoAs.

GET /step

Get all available steps for SOARCA.

Call payload

None

Response

200/OK

@startjson

{

"steps": [{

"module": "executor-module",

"category" : "analyses",

"context" : "external",

"step--5ea28f63-ac32-4e5e-bd0c-757a50a3a0d7":{

"type": "single",

"name": "BI for CoAs",

"delay": 0,

"timeout": 30000,

"command": {

"type": "http-api",

"command": "hxxps://our.bi/key=VALUE"

},

"on_success": "step--71b15428-275a-49b5-9f09-3944972a0054",

"on_failure": "step--71b15428-275a-49b5-9f09-3944972a0054"

}}]

}

@endjson

Module is the name of the module that executes the step.

Category defines what kind of step is executed:

@startuml

enum workflowType {

analyses

action

asset-look-up

etc...

}

@enduml

Context will define whether the call is internal or external:

@startuml

enum workflowType {

internal

external

}

@enduml

Error

400/BAD REQUEST with the general error payload.

/status

The status endpoints are used to get various statuses.

GET /status

Call this endpoint to see if SOARCA is up and ready. This call has no payload body.

Call payload

None

Response

200/OK

@startjson

{

"version": "1.0.0",

"runtime": "docker/windows/linux/macos/other",

"mode" : "development/production",

"time" : "2020-03-04T15:56:00.123456Z",

"uptime": {

"since": "2020-03-04T15:56:00.123456Z",

"milis": "uptime in milliseconds"

}

}

@endjson

Error

5XX/Internal error, 500/503/504 message.

GET /status/fins | not implemented

Call this endpoint to see if SOARCA Fins are up and ready. This call has no payload body.

Call payload

None

Response

200/OK

@startjson

{

"fins": [

{

"name": "Fin name",

"status": "ready/running/failed/stopped/...",

"id": "The fin UUID",

"version": "semver version: 1.0.0"

}

]

}

@endjson

Error

5XX/Internal error, 500/503/504 message.

GET /status/reporters | not implemented

Call this endpoint to see which SOARCA reporters are used. This call has no payload body.

Call payload

None

Response

200/OK

@startjson

{

"reporters": [

{

"name": "Reporter name"

}

]

}

@endjson

Error

5XX/Internal error, 500/503/504 message.

GET /status/ping

Check whether SOARCA is up. This call only returns when all SOARCA services are ready.

Call payload

None

Response

200/OK

pong

Usage example flow

Standalone

@startuml

participant "SWAGGER" as gui

control "SOARCA API" as api

control "controller" as controller

control "Executor" as exe

control "SSH-module" as ssh

gui -> api : /trigger/playbook with playbook body

api -> controller : execute playbook payload

controller -> exe : execute playbook

exe -> ssh : get url from log

exe <-- ssh : return result

controller <-- exe : results

api <-- controller: results

@enduml

Database load and execution

@startuml

participant "SWAGGER" as gui

control "SOARCA API" as api

control "controller" as controller

database "Mongo" as db

control "Executor" as exe

control "SSH-module" as ssh

gui -> api : /trigger/playbook/playbook--91220064-3c6f-4b58-99e9-196e64f9bde7

api -> controller : load playbook from database

controller -> db: retrieve playbook

controller <-- db: playbook json

controller -> controller: validate playbook

controller -> exe : execute playbook

exe -> ssh : get url from log

exe <-- ssh : return result

controller <-- exe : results

api <-- controller: results

@enduml

3 - Reporter API Description

Descriptions for the Reporter REST API endpoints

Endpoint description

We use standard HTTP status codes, see https://en.wikipedia.org/wiki/List_of_HTTP_status_codes

@startuml

protocol Reporter {

GET /reporter

GET /reporter/{execution-id}

}

@enduml

/reporter

The reporter endpoint is used to fetch information about ongoing playbook executions in SOARCA.

GET /reporter

Get all execution IDs of currently ongoing executions.

Call payload

None

Response

200/OK with payload:

@startjson

[

{

"executions": [

{"execution_id" : "1", "playbook_id" : "a", "started" : "<timestamp>", "..." : "..."},

"..."]

}

]

@endjson

Error

400/BAD REQUEST with payload:

General error

GET /reporter/{execution-id}

Get information about an ongoing execution.

Call payload

None

Response

Response data model:

| field | content | type | description |
|---|---|---|---|
| type | “execution_status” | string | The type of this content |
| id | UUID | string | The id of the execution |
| execution_id | UUID | string | The id of the execution |
| playbook_id | UUID | string | The id of the CACAO playbook executed by the execution |
| started | timestamp | string | The time at which the execution of the playbook started |
| ended | timestamp | string | The time at which the execution of the playbook ended (if so) |
| status | execution-status-enum | string | The current status of the execution |
| status_text | explanation | string | A natural language explanation of the current status or related info |
| step_results | step_results | dictionary | Map of step-id to related step execution data |
| request_interval | seconds | integer | Suggests the polling interval for the next request (default suggested is 5 seconds). |

Step execution data

| field | content | type | description |
|---|---|---|---|
| step_id | UUID | string | The id of the step being executed |
| started | timestamp | string | The time at which the execution of the step started |
| ended | timestamp | string | The time at which the execution of the step ended (if so) |
| status | execution-status-enum | string | The current status of the execution of this step |
| status_text | explanation | string | A natural language explanation of the current status or related info |
| executed_by | entity-identifier | string | The entity that executed the workflow step. This can be an organization, a team, a role, a defence component, etc. |
| commands_b64 | list of base64 | list of string | A list of Base64 encodings of the commands that were invoked during the execution of a workflow step, including any values stemming from variables. These are the actual commands executed. |
| error | error | string | Error raised during the execution of the step |
| variables | cacao variables | dictionary | Map of cacao variables handled in the step (both in and out) with current values and definitions |
| automated_execution | boolean | string | This property identifies if the workflow step was executed manually or automatically. It is either true or false. |

Execution status

Table from Cyentific RNI workflow Status

Vocabulary Name: execution-status-enum

| Property Name | Description |
|---|---|
| successfully_executed | The workflow step was executed successfully (completed). |
| failed | The workflow step failed. |
| ongoing | The workflow step is in progress. |
| server_side_error | A server-side error occurred. |
| client_side_error | A client-side error occurred. |
| timeout_error | A timeout error occurred. The timeout of a CACAO workflow step is specified in the “timeout” property. |
| exception_condition_error | An exception condition error occurred. A CACAO playbook can incorporate an exception condition at the playbook level and, in particular, with the “workflow_exception” property. |
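The vocabulary can be represented as typed string constants in Go. `ExecutionStatus`, `Valid` and `Finished` are hypothetical names. Treating every value except `ongoing` as final is an interpretation of the descriptions above.

```go
package main

import "fmt"

// ExecutionStatus holds a value of the execution-status-enum vocabulary.
type ExecutionStatus string

const (
	SuccessfullyExecuted    ExecutionStatus = "successfully_executed"
	Failed                  ExecutionStatus = "failed"
	Ongoing                 ExecutionStatus = "ongoing"
	ServerSideError         ExecutionStatus = "server_side_error"
	ClientSideError         ExecutionStatus = "client_side_error"
	TimeoutError            ExecutionStatus = "timeout_error"
	ExceptionConditionError ExecutionStatus = "exception_condition_error"
)

// Valid reports whether s is part of the vocabulary.
func (s ExecutionStatus) Valid() bool {
	switch s {
	case SuccessfullyExecuted, Failed, Ongoing, ServerSideError,
		ClientSideError, TimeoutError, ExceptionConditionError:
		return true
	}
	return false
}

// Finished reports whether s is a valid status other than ongoing.
func (s ExecutionStatus) Finished() bool {
	return s.Valid() && s != Ongoing
}

func main() {
	for _, s := range []ExecutionStatus{Ongoing, TimeoutError, "paused"} {
		fmt.Println(s, s.Valid(), s.Finished())
	}
	// ongoing true false
	// timeout_error true true
	// paused false false
}
```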

If the execution has completed and no further steps need to be executed

200/OK

with payload:

@startjson

[

{

"type" : "execution-status",

"id" : "<execution-id>",

"execution_id" : "<execution-id>",

"playbook_id" : "<playbook-id>",

"started" : "<time-string>",

"ended" : "<time-string>",

"status" : "<status-enum-value>",

"status_text": "<status description>",

"errors" : ["error1", "..."],

"step_results" : {

"<step-id-1>" : {

"execution_id": "<execution-id>",

"step_id" : "<step-id>",

"started" : "<time-string>",

"ended" : "<time-string>",

"status" : "<status-enum-value>",

"status_text": "<status description>",

"errors" : ["error1", "..."],

"variables": {

"<variable-name-1>" : {

"type": "<type>",

"name": "<variable-name>",

"description": "<description>",

"value": "<value>",

"constant": "<true/false>",

"external": "<true/false>"

}

}

}

},

"request_interval" : "<n-seconds>"

}

]

@endjson

The payload will include all information that the finished execution has created.

If the execution is still ongoing:

206/Partial Content

with the same payload as the 200 response, except that it does not yet include all information from the execution, since the execution is still ongoing.

The step results object will list the steps that have been executed until the report request, and those that are being executed at the moment of the report request.

The "request_interval" suggests the polling interval for the next request (default suggested is 5 seconds).

Error

400/BAD REQUEST with payload:

General error

404/NOT FOUND

No execution with the specified ID was found.

4 - Manual API Description

Descriptions for the SOARCA manual interaction REST API endpoints

Endpoint descriptions

We use standard HTTP status codes, see https://en.wikipedia.org/wiki/List_of_HTTP_status_codes

@startuml

protocol Manual {

GET /manual

GET /manual/{execution-id}/{step-id}

POST /manual/continue

}

@enduml

/manual

The manual interaction endpoint for SOARCA

GET /manual

Get all pending manual action objects that are currently waiting in SOARCA.

Call payload

None

Response

200/OK with a body containing a list of:

| field | content | type | description |
|---|---|---|---|
| type | manual-step-information | string | The type of this content |
| execution_id | UUID | string | The id of the execution |
| playbook_id | UUID | string | The id of the CACAO playbook executed by the execution |
| step_id | UUID | string | The id of the step executed by the execution |
| description | description of the step | string | The description from the workflow step |
| command | command | string | The command for the agent, either plain or Base64 encoded (see command_is_base64) |
| command_is_base64 | true or false | bool | Indicates if the command is in Base64 |
| targets | cacao agent-target | dictionary | Map of cacao agent-target with the target(s) of this command |
| out_args | cacao variables | dictionary | Map of cacao variables handled in the step out args with current values and definitions |

@startjson

[ {

"type" : "manual-step-information",

"execution_id" : "<execution-id>",

"playbook_id" : "<playbook-id>",

"step_id" : "<step-id>",

"command" : "<command here>",

"command_is_base64" : "false",

"targets" : {

"__target1__" : {

"type" : "<agent-target-type-ov>",

"name" : "<agent name>",

"description" : "<some description>",

"location" : "<.>",

"agent_target_extensions" : {}

}

},

"out_args": {

"<variable-name-1>" : {

"type": "<type>",

"name": "<variable-name>",

"description": "<description>",

"value": "<value>",

"constant": "<true/false>",

"external": "<true/false>"

}

}

}

]

@endjson

Error

400/BAD REQUEST with payload:

General error

GET /manual/{execution-id}/{step-id}

Get the pending manual action object that is currently waiting in SOARCA for a specific execution and step.

Call payload

None

Response

200/OK with body:

| field | content | type | description |
|---|---|---|---|
| type | manual-step-information | string | The type of this content |
| execution_id | UUID | string | The id of the execution |
| playbook_id | UUID | string | The id of the CACAO playbook executed by the execution |
| step_id | UUID | string | The id of the step executed by the execution |
| description | description of the step | string | The description from the workflow step |
| command | command | string | The command for the agent, either plain or Base64 encoded (see command_is_base64) |
| command_is_base64 | true or false | bool | Indicates if the command is in Base64 |
| targets | cacao agent-target | dictionary | Map of cacao agent-target with the target(s) of this command |
| out_args | cacao variables | dictionary | Map of cacao variables handled in the step out args with current values and definitions |

@startjson

{

"type" : "manual-step-information",

"execution_id" : "<execution-id>",

"playbook_id" : "<playbook-id>",

"step_id" : "<step-id>",

"command" : "<command here>",

"command_is_base64" : "false",

"targets" : {

"__target1__" : {

"type" : "<agent-target-type-ov>",

"name" : "<agent name>",

"description" : "<some description>",

"location" : "<.>",

"agent_target_extensions" : {}

}

},

"out_args": {

"<variable-name-1>" : {

"type": "<type>",

"name": "<variable-name>",

"description": "<description>",

"value": "<value>",

"constant": "<true/false>",

"external": "<true/false>"

}

}

}

@endjson

Error

404/Not found with payload:

General error

POST /manual/continue

Respond to a manual command pending in SOARCA. If out_args are defined, they must be filled in and returned in the payload body. Only the value of each variable is required in the response, although you can return the entire object. If the returned object does not match the original out_arg, the call will be considered failed.

Call payload

| field | content | type | description |
|---|---|---|---|
| type | manual-step-response | string | The type of this content |
| execution_id | UUID | string | The id of the execution |
| playbook_id | UUID | string | The id of the CACAO playbook executed by the execution |
| step_id | UUID | string | The id of the step executed by the execution |
| response_status | enum | string | success indicates successful fulfilment of the manual request. failure indicates failed satisfaction of the request |
| response_out_args | cacao variables | dictionary | Map of cacao variables names to cacao variable struct. Only name, type, and value are mandatory |

@startjson

{

"type" : "manual-step-response",

"execution_id" : "<execution-id>",

"playbook_id" : "<playbook-id>",

"step_id" : "<step-id>",

"response_status" : "success | failure",

"response_out_args": {

"<variable-name-1>" : {

"type": "<variable-type>",

"name": "<variable-name>",

"value": "<value>",

"description": "<description> (ignored)",

"constant": "<true/false> (ignored)",

"external": "<true/false> (ignored)"

}

}

}

@endjson

Response

200/OK with payload:

Generic execution information

Error

400/BAD REQUEST with payload:

General error
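The following sketches the out_args check described for POST /manual/continue. It follows the call payload table: name and type must match the pending definition. It treats a missing variable as a failed call. The `Variable` type and `validateOutArgs` are hypothetical, and rejecting unexpected extra variables is an assumption.

```go
package main

import "fmt"

// Variable is a reduced, hypothetical CACAO variable.
type Variable struct {
	Type  string `json:"type"`
	Name  string `json:"name"`
	Value string `json:"value"`
}

// validateOutArgs checks a manual response against the pending out_args.
func validateOutArgs(expected, returned map[string]Variable) error {
	for key, want := range expected {
		got, ok := returned[key]
		if !ok {
			return fmt.Errorf("out_arg %q missing from response", key)
		}
		// Name and type must match the original out_arg definition.
		if got.Name != want.Name || got.Type != want.Type {
			return fmt.Errorf("out_arg %q does not match the pending definition", key)
		}
	}
	// Assumption: variables that were not requested are rejected.
	for key := range returned {
		if _, ok := expected[key]; !ok {
			return fmt.Errorf("unexpected out_arg %q", key)
		}
	}
	return nil
}

func main() {
	pending := map[string]Variable{"__analyst_verdict__": {Type: "string", Name: "__analyst_verdict__"}}
	response := map[string]Variable{"__analyst_verdict__": {Type: "string", Name: "__analyst_verdict__", Value: "benign"}}
	fmt.Println(validateOutArgs(pending, response)) // <nil>
}
```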

5 - Decomposer

Playbook deconstructor architecture

Decomposer structure

The decomposer parses playbook objects into individual steps. This allows it to schedule new executor tasks.

Each incoming playbook is executed individually. Decomposing is done up to the step level.

Warning

SOARCA 1.0.x will only support steps of type action

struct ExecutionDetails{

uuid executionId

uuid playbookId

}

Interface IDecomposer{

ExecutionDetails, error Execute(cacao playbook)

error getStatus(uuid playbookId)

}

Interface IExecutor

class Controller

class Decomposer

IDecomposer <- Controller

IDecomposer <|.. Decomposer

IExecutor <- Decomposer

IExecutor

Interface to the executor, which in turn selects the right module or fin and executes the command on it.

Execution details

The struct contains the details of the execution: the execution id, which is created for every execution, and the playbook id. The combination of these is unique.
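The following Go sketch shows the execution details struct with a freshly generated execution id. It assumes a random version-4 UUID built from `crypto/rand`; SOARCA may generate ids differently. `newUUIDv4` and `newExecution` are hypothetical helpers.

```go
package main

import (
	"crypto/rand"
	"fmt"
)

// ExecutionDetails identifies one run of one playbook.
type ExecutionDetails struct {
	ExecutionID string
	PlaybookID  string
}

// newUUIDv4 returns a random RFC 4122 version-4 UUID string.
func newUUIDv4() (string, error) {
	var b [16]byte
	if _, err := rand.Read(b[:]); err != nil {
		return "", err
	}
	b[6] = (b[6] & 0x0f) | 0x40 // version 4
	b[8] = (b[8] & 0x3f) | 0x80 // RFC 4122 variant
	return fmt.Sprintf("%x-%x-%x-%x-%x", b[0:4], b[4:6], b[6:8], b[8:10], b[10:16]), nil
}

// newExecution pairs a fresh execution id with the playbook id, so the
// combination stays unique even when the same playbook runs many times.
func newExecution(playbookID string) (ExecutionDetails, error) {
	id, err := newUUIDv4()
	if err != nil {
		return ExecutionDetails{}, err
	}
	return ExecutionDetails{ExecutionID: id, PlaybookID: playbookID}, nil
}

func main() {
	details, err := newExecution("playbook--91220064-3c6f-4b58-99e9-196e64f9bde7")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(details.ExecutionID, details.PlaybookID)
}
```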

Decomposition of playbook

@startuml

participant caller
participant "Playbook decomposer" as decomposer
participant "Playbook state" as queue
participant Executor as exe

caller -> decomposer: Execute
caller <-- decomposer: ExecutionStatus
decomposer -> queue: store state
decomposer <-- queue:

loop for all playbook steps
decomposer -> queue: load state
decomposer <-- queue:
decomposer -> decomposer: parse step
decomposer -> decomposer: parse command
decomposer -> exe: execute command
note over exe: correct executor is selected
... Time has passed ...
decomposer <-- exe
end

@enduml

6 - Executor

Design of the SOARCA step executor

Components

The executor consists of the following components.

- Action executor

- Playbook action executor

- if-condition executor

- while-condition executor

- parallel executor

The decomposer interacts with every executor type. They all have separate interfaces to handle new step types in the future without changing the current interfaces.

package action{

interface IExecutor {

..., err Execute(...)

}

}

package playbookaction{

interface IExecutor {

..., err Execute(...)

}

}

package ifcondition{

interface IExecutor {

..., err Execute(...)

}

}

package whilecondition{

interface IExecutor {

..., err Execute(...)

}

}

package parallel{

interface IExecutor {

..., err Execute(...)

}

}

interface ICapability{

variables, error Execute(Metadata, command, variable[], target, agent)

string GetModuleName()

}

class "Decomposer" as decomposer

class "Action Executor" as Executor

class "Playbook Executor" as playbook

class "Parallel Executor" as parallelexecutor

class "While Executor" as while

class "If condition Executor" as condition

class "Ssh" as ssh

class "OpenC2" as openc2

class "HttpApi" as api

class "Manual" as manual

class "CalderaCmd" as caldera

class "Fin" as fin

action.IExecutor <|.. Executor

ICapability <-up- Executor

ICapability <|.. ssh

ICapability <|.. openc2

ICapability <|.. api

ICapability <|.. manual

ICapability <|.. caldera

ICapability <|.. fin

playbookaction.IExecutor <|.. playbook

ifcondition.IExecutor <|.. condition

whilecondition.IExecutor <|.. while

parallel.IExecutor <|.. parallelexecutor

decomposer -down-> playbookaction.IExecutor

decomposer -down-> ifcondition.IExecutor

decomposer -down-> whilecondition.IExecutor

decomposer -down-> parallel.IExecutor

decomposer -down-> action.IExecutor

Action executor

The action executor consists of the following components:

- The capability selector

- Native capabilities (command executors)

- MQTT capability to interact with Fin capabilities (third-party executors)

The capability selector will select the implementation which is capable of executing the incoming command. There are native capabilities based on the CACAO command-type-ov:

- Currently implemented

- ssh

- http-api

- openc2-http

- powershell

- manual

- Coming soon

- Future (potentially)

Native capabilities

The executor will select a module that is capable of executing the command and pass the details to it. The capability selection is performed based on the agent type (see Agent and Target Common Properties in the CACAO 2.0 spec). The convention is that the agent type must equal soarca-<capability identifier>, e.g. soarca-ssh or soarca-openc2-http.

The result of the step execution will be returned to the decomposer. A result can be either output variables or error status.

MQTT executor -> Fin capabilities

The Executor will put the command on the MQTT topic that is offered by the module. How a module handles this is described in the module documentation and in the fin documentation.

Component overview

package "Controller" {

component Decomposer as parser

}

package "Executor" {

component SSH as exe2

component "HTTP-API" as exe1

component MQTT as exe3

}

package "Fins" {

component "VirusTotal" as virustotal

component "E-mail Sender" as email

}

parser -- Executor

exe3 -- Fins : " MQTT topics"

Sequences

Example execution for SSH commands with SOARCA native capability.

@startuml

participant Decomposer as decomposer

participant "Capability selector" as selector

participant "SSH executor" as ssh

decomposer -> selector : Execute(...)

alt capability in SOARCA

selector -> ssh : execute ssh command

ssh -> ssh :

selector <-- ssh : results

decomposer <-- selector : OnCompletionCallback

else capability not available

decomposer <-- selector : Execution failure

note right: No capability can handle command \nor capability crashed etc..

end
@enduml

Playbook action executor

The playbook executor handles the execution of playbook action steps. The variables from the top-level playbook are injected into the playbook to be executed.

Variables in the downstream playbook may collide with variables of the top-level playbook. In that case, the top-level playbook variables are NOT transferred to the downstream playbook. Agents and targets cannot be transferred between playbooks at this time. The executor only loads the playbook; a new Decomposer is then created to execute it.
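A minimal sketch of the variable hand-over, assuming the collision rule applies per variable: a top-level variable is skipped only when the downstream playbook defines a variable with the same name. Variable and injectVariables are hypothetical names used for illustration, not SOARCA code.

```go
package main

import "fmt"

// Variable is a simplified CACAO variable (type and value only).
type Variable struct {
	Type  string
	Value string
}

// injectVariables returns the variables for the downstream playbook.
// Top-level variables are copied in first; a downstream definition with the
// same name then overrides it, so the colliding top-level value is not transferred.
func injectVariables(topLevel, downstream map[string]Variable) map[string]Variable {
	merged := make(map[string]Variable, len(topLevel)+len(downstream))
	for name, v := range topLevel {
		merged[name] = v
	}
	for name, v := range downstream {
		merged[name] = v // downstream wins on collision
	}
	return merged
}

func main() {
	top := map[string]Variable{
		"__ip__":   {Type: "ipv4-addr", Value: "10.0.0.1"},
		"__user__": {Type: "string", Value: "admin"},
	}
	down := map[string]Variable{"__ip__": {Type: "ipv4-addr", Value: "192.168.1.5"}}
	fmt.Println(injectVariables(top, down)["__ip__"].Value) // 192.168.1.5
}
```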

The result of the step execution will be returned to the decomposer. A result can be either output variables or error status.

package playbookaction{

interface IExecutor {

Variables, err Execute(meta, step, variables)

}

}

class "Decomposer" as decomposer

class "Action Executor" as exe

interface "IPlaybookController" as controller

interface "IDatabaseController" as database

playbookaction.IExecutor <|.. exe

decomposer -> playbookaction.IExecutor

exe -> controller

database <- exe

If-condition and While-condition executor

The condition executor evaluates the CACAO condition property of both the if-condition step and the while-condition step.

The result of the condition evaluation is returned to the decomposer. It determines the ID of the next step to be executed, and/or an error status.
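How an evaluated condition maps onto the next step ID can be sketched as follows. The field names follow the CACAO on_true, on_false and on_completion step properties, but the ConditionStep type and nextStep function are hypothetical, and the condition itself is assumed to have been evaluated already. Falling back to on_completion when on_false is absent is an assumption of this sketch.

```go
package main

import (
	"errors"
	"fmt"
)

// ConditionStep holds the CACAO step fields that decide where execution continues.
type ConditionStep struct {
	Type         string // "if-condition" or "while-condition"
	OnTrue       string // on_true
	OnFalse      string // on_false (if-condition only, optional)
	OnCompletion string // on_completion
}

// nextStep returns the ID of the next step, given the already evaluated condition.
func nextStep(step ConditionStep, conditionHolds bool) (string, error) {
	switch step.Type {
	case "if-condition":
		if conditionHolds {
			return step.OnTrue, nil
		}
		if step.OnFalse != "" {
			return step.OnFalse, nil
		}
		return step.OnCompletion, nil // assumption: no on_false means continue with on_completion
	case "while-condition":
		if conditionHolds {
			return step.OnTrue, nil // enter the loop body
		}
		return step.OnCompletion, nil // leave the loop
	default:
		return "", errors.New("not a condition step: " + step.Type)
	}
}

func main() {
	step := ConditionStep{Type: "if-condition", OnTrue: "action--block", OnCompletion: "end--1"}
	fmt.Println(nextStep(step, false)) // end--1 <nil>
}
```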

Parallel step executor

The parallel executor executes the parallel step. To simplify the implementation, the parallel branches are currently executed in sequence; this is possible because parallel branches must not depend on each other. This will change in a later release.

7 - Executor Modules

Native executor modules

Executor modules are part of the SOARCA core. They perform the actual commands in CACAO playbook steps.

Native modules in SOARCA

The following capability modules are currently defined in SOARCA:

- ssh

- http-api

- openc2-http

- powershell

- caldera-cmd

- manual

The capability will be selected based on the agent in the CACAO playbook step. The agent should be of type soarca and have a name corresponding to soarca-[capability name].

SSH capability

This capability executes SSH Commands on the specified targets.

This capability supports user authentication using the user-auth type. For SSH, username/password authentication is supported.

Success and failure

The SSH step is considered successful if a proper connection to each target can be established, and the supplied command executes without error and returns a zero exit status.

In every other circumstance the step is considered to have failed.

Variables

This module does not define specific variables as input, but variable interpolation is supported in the command and target definitions. It has the following output variables:

{

"__soarca_ssh_result__": {

"type": "string",

"value": "<stdout of the last command>"

}

}
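Variable interpolation and the output variable can be sketched as below, assuming the CACAO reference syntax __name__:value inside command strings. interpolate and sshOutput are hypothetical helpers, not SOARCA functions; unknown references are deliberately left untouched.

```go
package main

import (
	"fmt"
	"strings"
)

// Variable is a simplified CACAO variable.
type Variable struct {
	Type  string
	Value string
}

// interpolate replaces every "__name__:value" reference in text with the
// value of the matching variable. Unknown references are left as they are.
func interpolate(text string, vars map[string]Variable) string {
	pairs := make([]string, 0, 2*len(vars))
	for name, v := range vars {
		pairs = append(pairs, name+":value", v.Value)
	}
	return strings.NewReplacer(pairs...).Replace(text)
}

// sshOutput builds the step's output variables from the stdout of the last command.
func sshOutput(lastStdout string) map[string]Variable {
	return map[string]Variable{
		"__soarca_ssh_result__": {Type: "string", Value: lastStdout},
	}
}

func main() {
	vars := map[string]Variable{"__dir__": {Type: "string", Value: "/var/log"}}
	fmt.Println(interpolate("ls -la __dir__:value", vars)) // ls -la /var/log
	fmt.Println(sshOutput("total 0")["__soarca_ssh_result__"].Value)
}
```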

Example

{

"workflow": {

"action--7777c6b6-e275-434e-9e0b-d68f72e691c1": {

"type": "action",

"agent": "soarca--00010001-1000-1000-a000-000100010001",

"targets": ["linux--c7e6af1b-9e5a-4055-adeb-26b97e1c4db7"],

"commands": [

{

"type": "ssh",

"command": "ls -la"

}

]

}

},

"agent_definitions": {

"soarca--00010001-1000-1000-a000-000100010001": {

"type": "soarca",

"name": "soarca-ssh"

}

},

"target_definitions": {

"linux--c7e6af1b-9e5a-4055-adeb-26b97e1c4db7": {

"type": "linux",

"name": "target",

"address": { "ipv4": ["10.0.0.1"] }

}

}

}

HTTP-API capability

This capability implements the HTTP API Command.

Both HTTP Basic Authentication with user_id/password and token-based OAuth2 Authentication are supported.

At this time, redirects are not supported.

Success and failure

The command is considered to have completed successfully if a successful HTTP response is returned from each target. An HTTP response is successful if its response code is in the range 200-299.

Variables

This capability supports variable interpolation in the command, port, authentication info, and target definitions.

The result of the step is stored in the following output variables:

{

"__soarca_http_api_result__": {

"type": "string",

"value": "<http response body>"

}

}

Example

{

"workflow": {

"action--8baa7c78-751b-4de9-81d4-775806cee0fb": {

"type": "action",

"agent": "soarca--00020001-1000-1000-a000-000100010001",

"targets": ["http-api--4ebae9c3-9454-4e28-b25b-0f43cd97f9e0"],

"commands": [

{

"type": "http-api",

"command": "GET /overview HTTP/1.1",

"port": "8080"

}

]

}

},

"agent_definitions": {

"soarca--00020001-1000-1000-a000-000100010001": {

"type": "soarca",

"name": "soarca-http-api"

}

},

"target_definitions": {

"http-api--4ebae9c3-9454-4e28-b25b-0f43cd97f9e0": {

"type": "http-api",

"name": "target",

"address": { "dname": ["my.server.com"] }

}

}

}

OpenC2 capability

This capability implements the OpenC2 HTTP Command, by sending OpenC2 messages using the HTTPS transport method.

It supports the same authentication mechanisms as the HTTP-API capability.

Success and failure

Any successful HTTP response from an OpenC2 compliant endpoint (with a status code in the range 200-299) is considered a success. Connection failures and HTTP responses outside the 200-299 range are considered a failure.

Variables

It supports variable interpolation in the command, headers, and target definitions.

The result of the step is stored in the following output variables:

{

"__soarca_openc2_http_result__": {

"type": "string",

"value": "<openc2-http response body>"

}

}

Example

{

"workflow": {

"action--aa1470d8-57cc-4164-ae07-05745bef24f4": {

"type": "action",

"agent": "soarca--00030001-1000-1000-a000-000100010001",

"targets": ["http-api--5a274b6d-dc65-41f7-987e-9717a7941876"],

"commands": [{

"type": "openc2-http",

"command": "POST /openc2-api/ HTTP/1.1",

"content_b64": "ewogICJoZWFkZXJzIjogewogICAgInJlcXVlc3RfaWQiOiAiZDFhYzA0ODktZWQ1MS00MzQ1LTkxNzUtZjMwNzhmMzBhZmU1IiwKICAgICJjcmVhdGVkIjogMTU0NTI1NzcwMDAwMCwKICAgICJmcm9tIjogInNvYXJjYS5ydW5uZXIubmV0IiwKICAgICJ0byI6IFsKICAgICAgImZpcmV3YWxsLmFwaS5jb20iCiAgICBdCiAgfSwKICAiYm9keSI6IHsKICAgICJvcGVuYzIiOiB7CiAgICAgICJyZXF1ZXN0IjogewogICAgICAgICJhY3Rpb24iOiAiZGVueSIsCiAgICAgICAgInRhcmdldCI6IHsKICAgICAgICAgICJmaWxlIjogewogICAgICAgICAgICAiaGFzaGVzIjogewogICAgICAgICAgICAgICJzaGEyNTYiOiAiMjJmZTcyYTM0ZjAwNmVhNjdkMjZiYjcwMDRlMmI2OTQxYjVjMzk1M2Q0M2FlN2VjMjRkNDFiMWE5MjhhNjk3MyIKICAgICAgICAgICAgfQogICAgICAgICAgfQogICAgICAgIH0KICAgICAgfQogICAgfQogIH0KfQ==",

"headers": {

"Content-Type": ["application/openc2+json;version=1.0"]

}

}]

}

},

"agent_definitions": {

"soarca--00030001-1000-1000-a000-000100010001": {

"type": "soarca",

"name": "soarca-openc2-http"

}

},

"target_definitions": {

"http-api--5a274b6d-dc65-41f7-987e-9717a7941876": {

"type": "http-api",

"name": "openc2-compliant actuator",

"address": { "ipv4": ["187.0.2.12"] }

}

}

}

PowerShell capability

This capability implements the PowerShell Command, by sending PowerShell commands using the WinRM transport method.

It supports the username/password authentication mechanism.

Success and failure

Any successful command populates __soarca_powershell_result__. If an error occurs on the target, __soarca_powershell_error__ is populated and the step's error is set.
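A sketch of how raw output could be mapped onto the two output variables, assuming (this is not documented) that any output on the error stream means an error occurred on the target. The WinRM transport is omitted, and Variable and powershellOutputs are hypothetical names.

```go
package main

import (
	"errors"
	"fmt"
)

// Variable is a simplified CACAO variable.
type Variable struct {
	Type  string
	Value string
}

// powershellOutputs maps raw stdout/stderr onto the output variables.
// Assumption: a non-empty error stream means the command failed on the target.
func powershellOutputs(stdout, stderr string) (map[string]Variable, error) {
	out := map[string]Variable{
		"__soarca_powershell_result__": {Type: "string", Value: stdout},
	}
	if stderr != "" {
		out["__soarca_powershell_error__"] = Variable{Type: "string", Value: stderr}
		return out, errors.New("powershell command reported an error on the target")
	}
	return out, nil
}

func main() {
	vars, err := powershellOutputs("", "Access is denied.")
	fmt.Println(vars["__soarca_powershell_error__"].Value, err != nil) // Access is denied. true
}
```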

Variables

It supports variable interpolation in the command, headers, and target definitions.

The result of the step is stored in the following output variables:

{

"__soarca_powershell_result__": {

"type": "string",

"value": "<raw powershell output>"

},

"__soarca_powershell_error__": {

"type": "string",

"value": "<raw powershell error output>"

}

}

Example

{

"workflow": {

"action--aa1470d8-57cc-4164-ae07-05745bef24f4": {

"type": "action",

"agent": "soarca--00040001-1000-1000-a000-000100010001",

"targets": ["net-address--d42d6731-791d-41af-8fa4-7b5699dfe402"],

"commands": [{

"type": "powershell",

"command": "pwd"

}]

}

},

"agent_definitions": {

"soarca--00040001-1000-1000-a000-000100010001": {

"type": "soarca",

"name": "soarca-powershell"

}

},

"target_definitions": {

"net-address--d42d6731-791d-41af-8fa4-7b5699dfe402": {

"type": "net-address",

"name": "Windows Server or Client with WinRM enabled",

"address": { "ipv4": ["187.0.2.12"] }

}

}

}

Caldera capability

This capability executes Caldera Abilities on the specified targets by creating an operation on a separate Caldera server.

The server is packaged in the Docker build of SOARCA, but can also be provided separately as a stand-alone server.

Success and failure

The Caldera step is considered successful if a connection to the Caldera server can be established, the ability (if supplied as b64command) can be created on the server, an operation can be started on the specified group and adversary, and the operation finishes without errors.

In every other circumstance the step is considered to have failed.

Variables

This module does not define specific variables as input, but variable interpolation is supported in the command and target definitions. It has the following output variables:

{

"__soarca_caldera_cmd_result__": {

"type": "string",

"value": ""

}

}

Example

This example will start an operation that executes the ability with ID 36eecb80-ede3-442b-8774-956e906aff02 on the Caldera agent group infiltrators.

{

"workflow": {

"action--7777c6b6-e275-434e-9e0b-d68f72e691c1": {

"type": "action",

"agent": "soarca--00050001-1000-1000-a000-000100010001",

"targets": ["security-category--c7e6af1b-9e5a-4055-adeb-26b97e1c4db7"],

"commands": [

{

"type": "caldera",

"command": "36eecb80-ede3-442b-8774-956e906aff02"

}

]

}

},

"agent_definitions": {

"soarca--00050001-1000-1000-a000-000100010001": {

"type": "soarca",

"name": "soarca-caldera-cmd"

}

},

"target_definitions": {

"security-category--c7e6af1b-9e5a-4055-adeb-26b97e1c4db7": {

"type": "security-category",

"name": "infiltrators",

"category": ["caldera"]

}

}

}

Manual capability

This capability executes manual commands and provides them natively through the SOARCA API, though other integrations are possible.

The manual capability will allow an operator to interact with a playbook. It could allow one to perform a manual step that could not be automated, enter a variable to the playbook execution or a combination of these operations.

The manual step should specify a timeout; if it does not, SOARCA uses a default timeout of 10 minutes. If a timeout occurs, the step is considered failed.

Manual capability architecture

In essence, executing a manual command involves the following actions:

- A message, i.e. the command of a manual command, is posted somewhere, somehow, together with the variables whose values are expected to be assigned or updated (if any).

- The playbook execution stops, waiting for something to respond to the message with the variable values.

- Once something replies, the variables are streamed into the playbook execution and handled accordingly.

It should be possible to post a manual command message anywhere and in any way, and to allow anything to respond back. Hence, SOARCA adopts a flexible architecture to accommodate different ways of manual interaction. Below is a view of the architecture.

When a playbook execution hits an Action step with a manual command, the ManualCapability will queue the instruction into the CapabilityInteraction module. The module does essentially three things:

- It stores the status of the manual command, and implements the SOARCA API interactions with the manual command.

- If manual integrations are defined for the SOARCA instance, the CapabilityInteraction module notifies the manual integration modules, so that they can handle the manual command in turn.

- It waits for the manual command to be satisfied, either via the SOARCA APIs or via a manual integration. Whichever of the two responds first resolves the manual command. The resolution of the command may or may not assign new values to variables in the playbook. Finally, the CapabilityInteraction module replies to the ManualCommand module.

Finally, the ManualCapability completes its execution, having updated the values of the variables in the outArgs of the command, if any. Timeouts and errors are handled accordingly.

@startuml

set separator ::

class ManualCommand

protocol ManualAPI {

GET /manual

GET /manual/{exec-id}/{step-id}

POST /manual/continue

}

interface ICapability{

Execute()

}

interface ICapabilityInteraction{

Queue(command InteractionCommand, manualComms ManualCapabilityCommunication)

}

interface IInteracionStorage{

GetPendingCommands() []CommandData

GetPendingCommand(execution.metadata) CommandData

PostContinue(execution.metadata) ExecutionInfo

}

interface IInteractionIntegrationNotifier {

Notify(command InteractionIntegrationCommand, channel manualCapabilityCommunication.Channel)

}

class Interaction {

notifiers []IInteractionIntegrationNotifier

storage map[executionId]map[stepId]InteractionStorageEntry

}

class ThirdPartyManualIntegration

ManualCommand .up.|> ICapability

ManualCommand -down-> ICapabilityInteraction

Interaction .up.|> ICapabilityInteraction

Interaction .up.|> IInteracionStorage

ManualAPI -down-> IInteracionStorage

Interaction -right-> IInteractionIntegrationNotifier

ThirdPartyManualIntegration .up.|> IInteractionIntegrationNotifier

The default, internally supported way to interact with a manual step is through SOARCA’s manual API.

Besides SOARCA’s manual API, SOARCA is designed to allow the configuration of additional ways in which a manual command can be executed. In particular, there can be one manual integration (besides the native manual APIs) per running SOARCA instance.

An integration’s code should implement the IInteractionIntegrationNotifier interface and return the result of the manual command execution, in the form of an InteractionIntegrationResponse object, on the respective channel.

The diagram below shows in more detail how the manual interaction components work.

@startuml

control "ManualCommand" as manual

control "Interaction" as interaction

control "ManualAPI" as api

control "ThirdPartyManualIntegration" as 3ptool

participant "Integration" as integration

-> manual : ...manual command

manual -> interaction : Queue(command, capabilityChannel, timeoutContext)

manual -> manual : idle wait on capabilityChannel

activate manual

activate interaction

interaction -> interaction : save pending manual command

interaction ->> 3ptool : Notify(command, capabilityChannel, timeoutContext)

3ptool <--> integration : custom handling command posting

deactivate interaction

alt Command Response

group Native ManualAPI flow

api -> interaction : GetPendingCommands()

activate interaction

activate api

api -> interaction : GetPendingCommand(execution.metadata)

api -> interaction : PostContinue(ManualOutArgsUpdate)

interaction -> interaction : build InteractionResponse

deactivate api

interaction --> manual : capabilityChannel <- InteractionResponse

manual ->> interaction : timeoutContext.Cancel() event

interaction -> interaction : de-register pending command

deactivate interaction

manual ->> 3ptool : timeoutContext.Deadline() event

activate 3ptool

3ptool <--> integration : custom handling command completed

deactivate manual

<- manual : ...continue execution

deactivate 3ptool

deactivate integration

end

else

group Third Party Integration flow

integration --> 3ptool : custom handling command response

activate manual

activate integration

deactivate integration

activate 3ptool

3ptool -> 3ptool : build InteractionResponse

3ptool --> manual : capabilityChannel <- InteractionResponse

deactivate 3ptool

manual ->> interaction : timeoutContext.Cancel() event

activate interaction

interaction -> interaction : de-register pending command

deactivate interaction

manual ->> 3ptool : timeoutContext.Deadline() event

activate 3ptool

3ptool <--> integration : custom handling command completed

deactivate 3ptool

activate integration

deactivate integration

<- manual : ...continue execution

deactivate manual

deactivate integration

end

end

@enduml

Note that whichever resolves the manual command first, whether the ManualAPI or a third-party integration, returns its results to the workflow execution, and the manual command is then removed from the pending list. Hence, if a manual command is resolved e.g. via the manual integration, a postContinue API call for that same command will not go through, as the command has already been resolved and removed from the registry of pending manual commands.

The diagram below shows instead what happens when a timeout occurs for the manual command.

@startuml

control "ManualCommand" as manual

control "Interaction" as interaction

control "ManualAPI" as api

control "ThirdPartyManualIntegration" as 3ptool

participant "Integration" as integration

-> manual : ...manual command

manual -> interaction : Queue(command, capabilityChannel, timeoutContext)

manual -> manual : idle wait on capabilityChannel

activate manual

activate interaction

interaction -> interaction : save pending manual command

interaction ->> 3ptool : Notify(command, capabilityChannel, timeoutContext)

3ptool --> integration : custom handling command posting

deactivate interaction

group Command execution times out

manual -> manual : timeoutContext.Deadline()

manual ->> interaction : timeoutContext.Deadline() event

manual ->> 3ptool : timeoutContext.Deadline() event

3ptool --> integration : custom handling command timed-out view

activate interaction

interaction -> interaction : de-register pending command

<- manual : ...continue execution

deactivate manual

...

api -> interaction : GetPendingCommand(execution.metadata)

interaction -> api : no pending command (404)

end

@enduml

Success and failure

In SOARCA the manual step is considered successful if a response is made through the manual api, or an integration. The manual command can specify a timeout, but if none is specified SOARCA will use a default timeout of 10 minutes. If a timeout occurs the step is considered as failed and SOARCA will return an error to the decomposer.

Variables

This module does not define specific variables as input, but one must use out_args if an operator wants to provide a response to be used later in the playbook.

Example

{

"workflow": {

"action--7777c6b6-e275-434e-9e0b-d68f72e691c1": {

"type": "action",

"agent": "soarca--00010001-1000-1000-a000-000100010001",

"targets": ["linux--c7e6af1b-9e5a-4055-adeb-26b97e1c4db7"],

"commands": [

{

"type": "manual",

"command": "Reset the firewall by unplugging it"

}

]

}

},

"agent_definitions": {

"soarca--00010001-1000-1000-a000-000100010001": {

"type": "soarca",

"name": "soarca-manual"

}

},

"target_definitions": {

"linux--c7e6af1b-9e5a-4055-adeb-26b97e1c4db7": {

"type": "linux",

"name": "target",

"address": { "ipv4": ["10.0.0.1"] }

}

}

}

MQTT fin module

This module is used by SOARCA to communicate with fins (capabilities). See the fin documentation for more information.

8 - Database

Database details of SOARCA

SOARCA uses MongoDB as its database. It is used to store and retrieve playbooks; later it will also store individual steps.

Mongo

SOARCA employs separate collections in Mongo, utilizing a dedicated database object for each of them:

Interface IDatabase{

void create(JsonData playbook)

JsonData read(Id playbookId)

void update(Id playbookId, JsonData playbook)

void remove(Id playbookId)

}

Interface IPlaybookDatabase

Interface IStepDatabase

class Controller

class PlaybookDatabase

class Mongo

Controller -> IPlaybookDatabase

IStepDatabase <- Controller

IPlaybookDatabase <|.. PlaybookDatabase

IStepDatabase <|.. StepDatabase

StepDatabase -> IDatabase

IDatabase <- PlaybookDatabase

IDatabase <|.. Mongo

Getting data

Getting playbook data

participant Controller as controller

participant "Playbook Database" as playbook

database Database as db

controller -> playbook : get(id)

playbook -> db : read(playbookId)

note right

When the read fails an error will be thrown

end note

playbook <-- db : "playbook JSON"

controller <-- playbook: "CacaoPlaybook Object"

Writing playbook data

participant Controller as controller

participant "Playbook Database" as playbook

database Database as db

controller -> playbook : set(CacaoPlaybook Object)

playbook -> db : create(playbook JSON)

note right

When the create fails an error will be thrown

end note

Update playbook data

participant Controller as controller

participant "Playbook Database" as playbook

database Database as db

controller -> playbook : update(CacaoPlaybook Object)

playbook -> db : update(playbook id,playbook JSON)

note right

When the update fails an error will be thrown

end note

playbook <-- db : true

controller <-- playbook: true

Delete playbook data

participant Controller as controller

participant "Playbook Database" as playbook

database Database as db

controller -> playbook : remove(playbook id)

playbook -> db : remove(playbook id)

note right

When the remove fails an error will be thrown

end note

Handling an error

participant Controller as controller

participant "Playbook Database" as playbook

database Database as db

controller -> playbook : remove(playbook id)

playbook -> db : remove(playbook id)

playbook <-- db: error

note right

playbook does not exist

end note

controller <-- playbook: error

9 - Reporter

Reporting of playbook workflow information and step execution

SOARCA utilizes push-based reporting to provide information on the instantiation of a CACAO workflow, and information on the execution of workflow steps.

General Reporting Architecture

For the execution of a playbook, a Decomposer and invoked Executors are injected with a Reporter. The Reporter maintains the reporting logic that reports execution information to a set of specified and available targets.

A reporting target can be internal to SOARCA, such as a Cache. A reporting target can also be a third-party tool, such as an external SOAR/SIEM or incident case management system.

Upon execution trigger for a playbook, information about the chain of playbook steps to be executed will be pushed to the targets via dedicated reporting classes.

During the execution of the workflow steps, the reporting classes will dynamically update the step execution information, such as output variables and step execution success or failure.

The reporting features will enable the population and updating of views and data concerning workflow composition and its dynamic execution results. This data can be transmitted to SOARCA internal reporting components such as a cache, as well as to third-party tools.

The schema below represents the architecture concept.

@startuml

set separator ::

interface IStepReporter{

ReportStep() error

}

interface IWorkflowReporter{

ReportWorkflow() error

}

interface IDownStreamReporter {

ReportWorkflow() error

ReportStep() error

}

class Reporter {

reporters []IDownStreamReporter

RegisterReporters() error

ReportWorkflow()

ReportStep()

}

class Cache

class 3PTool

class Decomposer

class Executor

Decomposer -right-> IWorkflowReporter

Executor -left-> IStepReporter

Reporter .up.|> IStepReporter

Reporter .up.|> IWorkflowReporter

Reporter -right-> IDownStreamReporter

Cache .up.|> IDownStreamReporter

3PTool .up.|> IDownStreamReporter

Interfaces

The reporting logic and extensibility is implemented in the SOARCA architecture by means of reporting interfaces. At this stage, we implement an IWorkflowReporter to push information about the entire workflow to be executed, and an IStepReporter to push step-specific information as the steps of the workflow are executed.

A high-level Reporter component will implement both interfaces and maintain the list of DownStreamReporters activated for the SOARCA instance. The Reporter class will invoke all reporting functions for each active reporter. The Decomposer and the Executor components are each injected with the Reporter, but only through the workflow reporter interface and the step reporter interface respectively, to keep the reporting scopes separate.

The DownStream reporters will implement push-based reporting functions specific for the reporting target, as shown in the IDownStreamReporter interface. Internal components to SOARCA, and third-party tool reporters, will thus implement the IDownStreamReporter interface.
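The fan-out described above can be sketched with Go interfaces. The payload types are hypothetical simplifications, and collecting all downstream errors with errors.Join (Go 1.20 or later) is an assumption of this sketch. What it shows is the separation of scopes: one Reporter value is handed to the Decomposer as an IWorkflowReporter and to the Executors as an IStepReporter, and each call reaches every registered downstream reporter.

```go
package main

import (
	"errors"
	"fmt"
)

// WorkflowReport and StepReport are hypothetical, heavily simplified payloads.
type WorkflowReport struct {
	ExecutionID string
	StepIDs     []string
}

type StepReport struct {
	ExecutionID string
	StepID      string
	Err         error
}

// Upstream interfaces, as seen by the Decomposer and the Executors.
type IWorkflowReporter interface {
	ReportWorkflow(WorkflowReport) error
}

type IStepReporter interface {
	ReportStep(StepReport) error
}

// IDownStreamReporter is implemented by every reporting target.
type IDownStreamReporter interface {
	ReportWorkflow(WorkflowReport) error
	ReportStep(StepReport) error
}

// Reporter implements both upstream interfaces and fans each report out to all
// registered downstream reporters. One failing target does not stop the others.
type Reporter struct {
	reporters []IDownStreamReporter
}

func (r *Reporter) RegisterReporters(reporters ...IDownStreamReporter) error {
	if len(reporters) == 0 {
		return errors.New("no reporters to register")
	}
	r.reporters = append(r.reporters, reporters...)
	return nil
}

func (r *Reporter) ReportWorkflow(w WorkflowReport) error {
	var errs []error
	for _, d := range r.reporters {
		errs = append(errs, d.ReportWorkflow(w))
	}
	return errors.Join(errs...) // nil if every reporter succeeded
}

func (r *Reporter) ReportStep(s StepReport) error {
	var errs []error
	for _, d := range r.reporters {
		errs = append(errs, d.ReportStep(s))
	}
	return errors.Join(errs...)
}

// countingReporter is a toy downstream target used only for this example.
type countingReporter struct{ workflows, steps int }

func (c *countingReporter) ReportWorkflow(WorkflowReport) error { c.workflows++; return nil }
func (c *countingReporter) ReportStep(StepReport) error         { c.steps++; return nil }

func main() {
	target := &countingReporter{}
	reporter := &Reporter{}
	_ = reporter.RegisterReporters(target)
	var decomposerView IWorkflowReporter = reporter // the Decomposer only sees workflow reporting
	var executorView IStepReporter = reporter       // Executors only see step reporting
	_ = decomposerView.ReportWorkflow(WorkflowReport{ExecutionID: "e1"})
	_ = executorView.ReportStep(StepReport{ExecutionID: "e1", StepID: "s1"})
	fmt.Println(target.workflows, target.steps) // 1 1
}
```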

Future plans

At this stage, third-party tools integrations may be built in SOARCA via packages implementing reporting logic for the specific tools. Alternatively, third-party tools may implement pull-based mechanisms (via the API) to get information from the execution of a playbook via SOARCA.

In the near future, we will (also) make available a SOARCA Report API that can establish a WebSocket connection to a third-party tool. This will allow SOARCA to push execution updates to third-party tools as they happen, without external tools having to poll SOARCA.

Native Reporters

SOARCA internally implements reporting modules to handle database and cache reporting.

Cache reporter

The Cache reporter mediates between the decomposer and executors, the database, and the reporting APIs. As a DownStreamReporter, the Cache stores workflow and step reports in memory for an ongoing execution. As an IExecutionInformer, the Cache provides information to the reporting API. The schema below shows how it is positioned in the SOARCA architecture.

@startuml

protocol /reporter

interface IDownStreamReporter {

ReportWorkflow() error

ReportStep() error

}

interface IDatabase

interface IExecutionInformer

class ReporterApi

class Reporter

class Cache {

cache []ExecutionEntry

}

"/reporter" -right-> ReporterApi

Reporter -> IDownStreamReporter

Cache -left-> IDatabase

Cache .up.|> IDownStreamReporter

Cache .up.|> IExecutionInformer

ReporterApi -down-> IExecutionInformer

The Cache thus reports the execution information downstream, both to the database and in memory. Upon execution information requests from the /reporter API, the cache can provide the information either from memory or by querying the database.

10 - Logging


SOARCA supports extensive logging. Logging is based on the logrus framework.

Logging can be directed to different destinations, depending on your application. The following destinations are available:

Destination

- stdout (default, terminal)

- To file (to the log file path)

Later:

- syslog (NOT YET IMPLEMENTED)

Log levels

SOARCA supports the following log levels, listed with their intended use:

- PANIC (non-fixable error, system crash)

- FATAL (non-fixable error; a restart would fix it)

- ERROR (an operation went wrong, but the error can be caught by a higher-level component)

- WARNING (let the user know that some operation might not have the expected result, but execution can continue on the normal path)

- INFO, the default (let the user know that a major event has occurred)

- DEBUG (add some extra detail to normal execution paths)

- TRACE (get fine-grained detail from the logging)

Types of logging

SOARCA logs different types of information, which are combined in the same output.

Runtime logging

Runtime logging includes the running state of SOARCA, errors encountered when registering modules, etc.

Security event logging

Security event logging records the execution status of playbooks and database updates of playbooks.

Using the logger (developer)

To use SOARCA logging you can add the following to your module.

type YourModule struct {

}

var component = reflect.TypeOf(YourModule{}).PkgPath()

var log *logger.Log

func init() {

log = logger.Logger(component, logger.Info, "", logger.Json)

}

Changing log level

To change logging for your SOARCA instance, you can use the following environment variables:

| variable | content | description |
|---|---|---|
| LOG_GLOBAL_LEVEL | [Log levels] | One of the specified log levels. Defaults to info |
| LOG_MODE | development / production | If production is chosen, LOG_GLOBAL_LEVEL is used for all modules. Defaults to production |
| LOG_FILE_PATH | filepath | Path to the log file you want to use for all logging. Defaults to "" (empty string) |
| LOG_FORMAT | text / json | The logging can be in plain text format or in JSON format. Defaults to json |

These can be set as environment variables or loaded through the .env file:

LOG_GLOBAL_LEVEL: "info"

LOG_MODE: "production"

LOG_FILE_PATH: ""

LOG_FORMAT: "json"